When it comes to AI changing the tech world, we’re at a juncture where you’ve either:

- seen some shit with your own eyes, or you trust your own sense of where this is going

- or, you haven’t and you’re dubious

It feels like people are living in a fractured reality. For the moment, that split is stable. But reality is not completely subjective. The way we each get to live in the world is shaped by things outside our individual perspectives. That is going to hit all of us, regardless of what we believe.

Reality is subjective-ish

We all know the phrase “perception is reality.” It reflects the fact that, in many situations, humans don’t actually need to agree on the truth. Sometimes you’re better off meeting people where they are in their subjective reality than trying to change it. For instance, whether I believe the Earth is a sphere or a flat disk has no direct bearing on my life¹, unless I need to fly from Rio to Sydney or sail around Antarctica.

However, when it comes to perspectives on AI, objective truth matters to all of us who do knowledge work, because the truth determines whether we can make a living in the long run.

“What’s this all about?” you might ask.

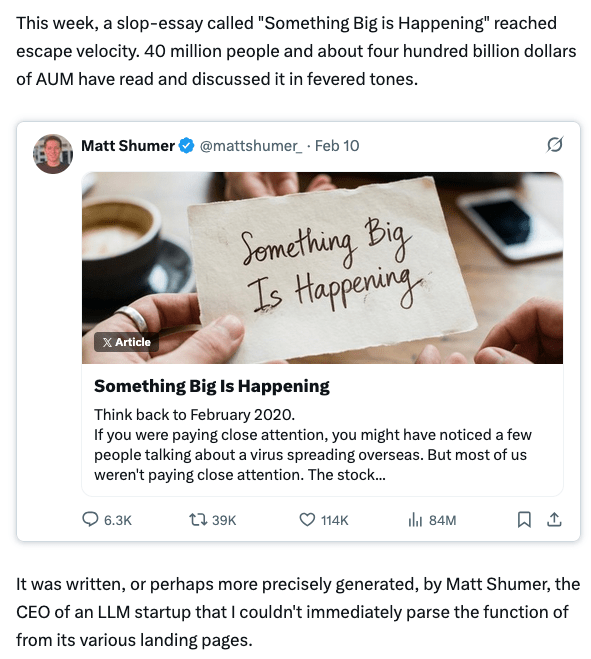

Last week, this piece dropped: https://shumer.dev/something-big-is-happening.

A few days later, it was rebutted: https://x.com/willmanidis/status/2021655191901155534?s=46

I read both pieces. Will Manidis could be correct that the first piece is self-serving, AI-generated agitprop. I don’t know.

But that doesn’t mean the first piece is wrong. In fact, I believe Matt Shumer is largely right.

My reality

I’ve seen some shit. Code can now be generated at a huge multiple of the old rate. I’m going to call it 10x, because I think that’s roughly accurate for me. In particular, the first draft of a solution to a task or bug comes together vastly faster. Often, the code archaeology and solution design that would have taken a couple of weeks is compressed into an hour.

Now, this doesn’t mean I’m shipping 10x. I find that incomplete work piles up in the final stages, and rework is usually required to cross the finish line. But I’m shipping maybe 2-3x, and I have every reason to believe I will do much better as tooling, process, models, prompting, team organization, and my own hands-on experience all improve at once.

I work on a mature codebase with millions of users. Changes are risky, and that slows things down. On greenfield projects, I have seen people reach productivity beyond 10x what used to be possible.

None of this is magical or free. I’m pretty sure I’m spending hundreds of dollars per day on AI tokens on behalf of my company. But my company has decided that the benefits of adapting to the new tooling vastly outweigh today’s costs, and I think they’re right. Those benefits come in two forms: productivity today in getting new value to market, and readiness for the changes tomorrow will bring.

What’s next

I’ll make one specific prediction: by mid-2026, every developer who figures out the new tooling will be 4x as productive as they were a year earlier, especially those in engineering orgs that are committed to adopting and adapting. This is just one of many potential impacts of AI, but it alone would rock the entire software industry and the world beyond.

I believe that in most such organizations, it will eventually be “up or out” for engineers. You had better either be as productive as your peers or be irreplaceable in some other way; otherwise, it will be hard to justify your employment. Productivity will be measured against the fully loaded cost of the developer: compensation plus tooling spend. (Tooling spend used to be negligible compared to compensation, but no longer.) Developer productivity is notoriously hard to measure, but it will become obvious who is producing at the new level of expectation and who is not. Smart organizations will do whatever they can to support the evolution of their people, but at some point, the music will stop for people who aren’t on board.

Longer term, I think we’re going to see seismic shifts across the industry. At some companies, the new level of productivity will unlock the ability to ship things that used to be beyond the horizon of the product roadmap. These places will still be glad to have tons of engineers. Others will find they can get by with fewer. We’re going to see companies rise and fall much faster over the next few years.

This is happening

So much of the conversation I see online questions whether this is all real. I get that; I was a skeptic, too. If you haven’t seen what’s possible up close, it’s easy to write this off as hype. But the discussion is asymmetric. I’m arguing that the ability now exists to ship at a multiple of last year’s pace. To be correct, I only need one example, and I’m speaking from what I have observed with my own eyes. The counterargument is that this won’t be possible any time soon, and that claim carries a much higher burden of proof.

Many don’t want to believe it. It threatens their identity as coders. It threatens the way they make a living. It challenges their ethics. They are motivated to reason against this. But not wanting to believe something won’t make it false.

I don’t know whether any of this is a good thing. I am fearful on multiple levels². But that question is independent of what I believe is actually happening. I am trying to be prepared, and I suggest that everyone else do likewise. Now is the time to educate yourself, build hands-on experience, share knowledge, and invest in your relationships.

1. I realized, while arguing with a flat-earth-believing loved one, that my certainty that the Earth is round comes not from lived experience but from trust in my chain of knowledge.

2. Dario Amodei, co-founder and CEO of the leading AI lab Anthropic, wrote an essay on the dangers of this moment in history, which covers just some of my concerns: https://www.darioamodei.com/essay/the-adolescence-of-technology